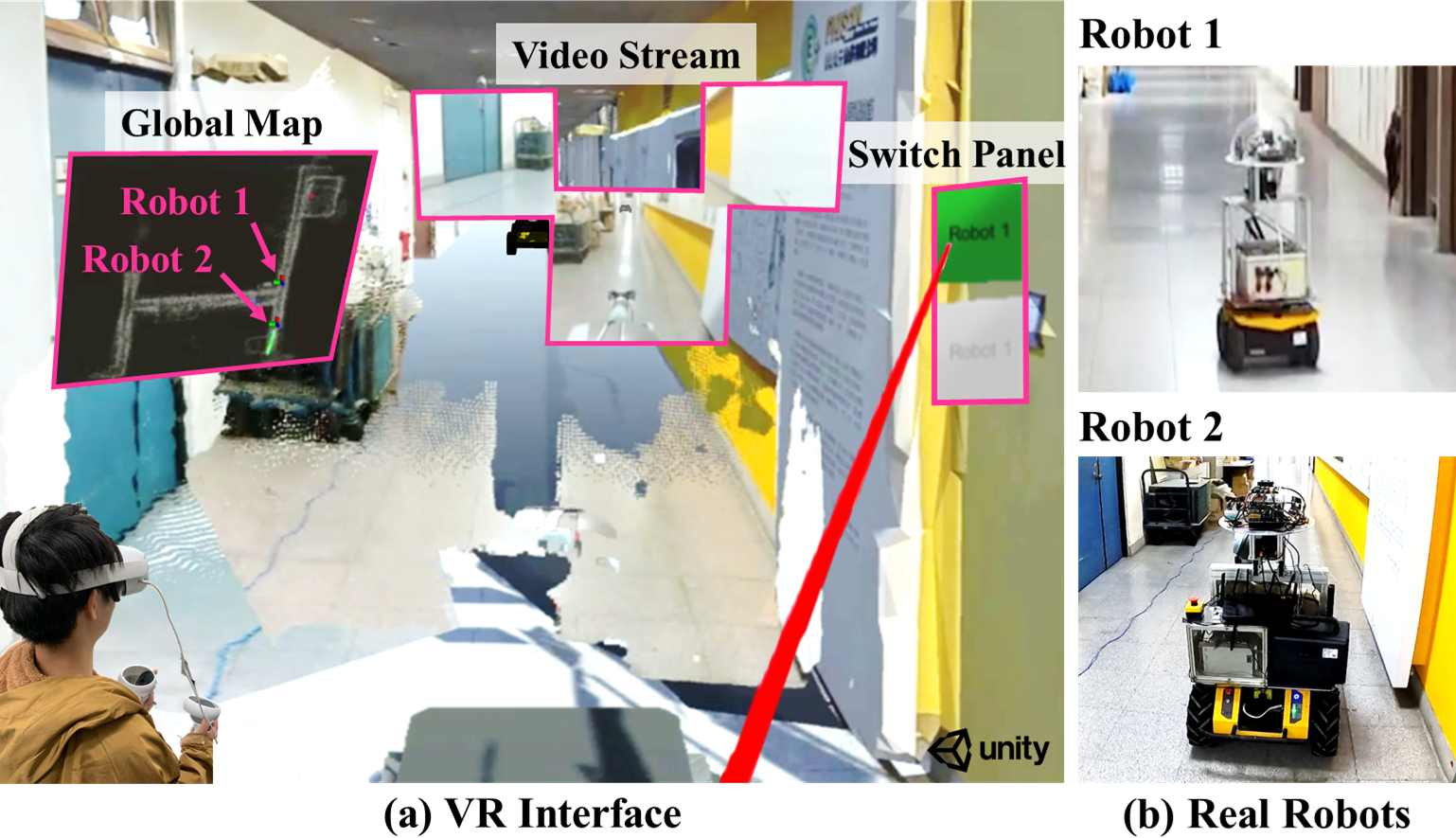

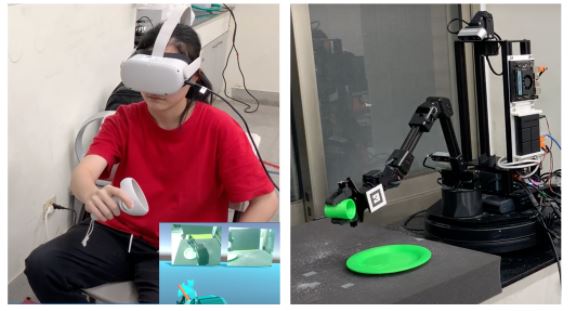

An Evaluation Framework of Human-Robot Teaming for Navigation among Movable Obstacles via Virtual Reality-based Interactions

Project Link

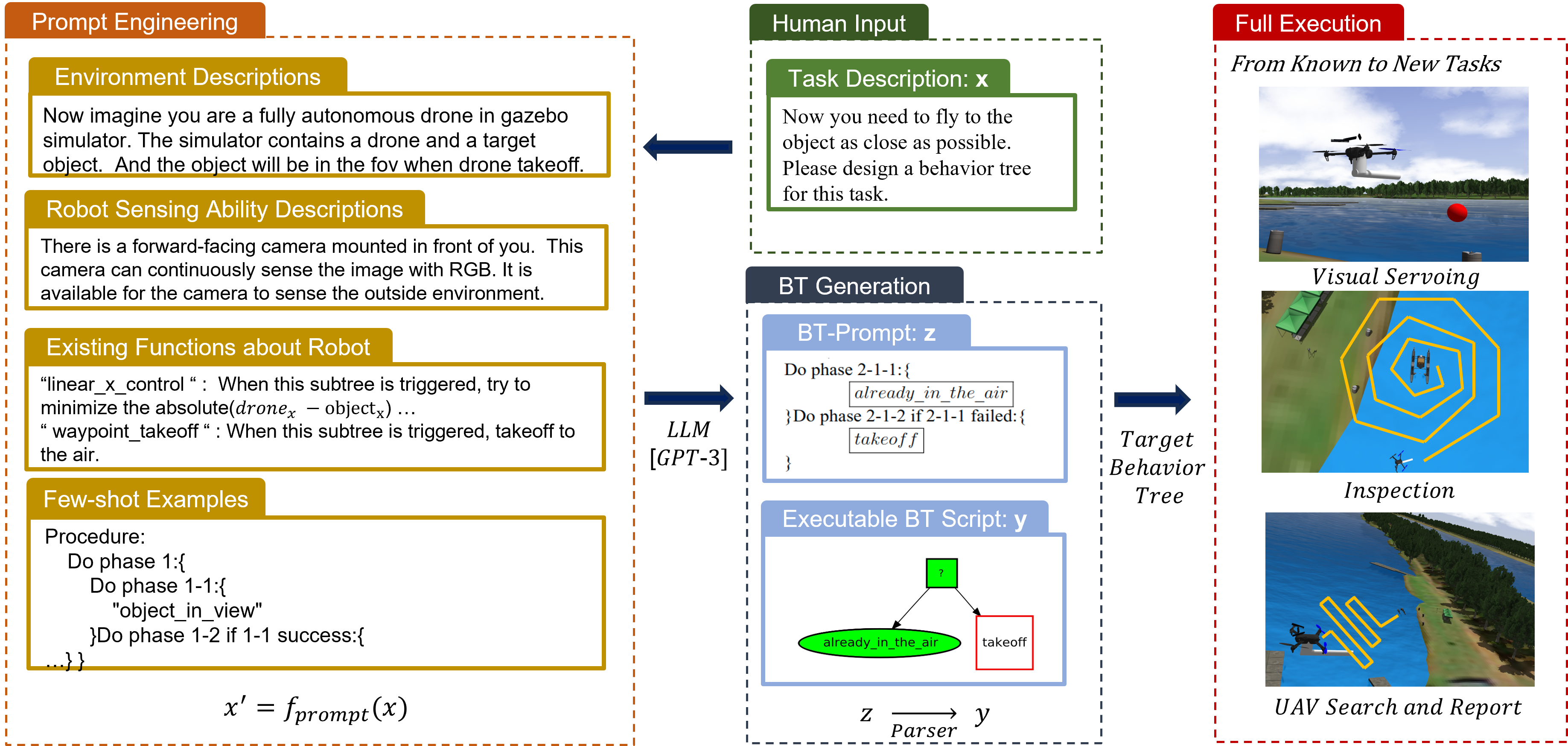

A High-level Task Planner using LLM-Generated Behavior Tree for Aerial Navigation and Manipulation

Project Link

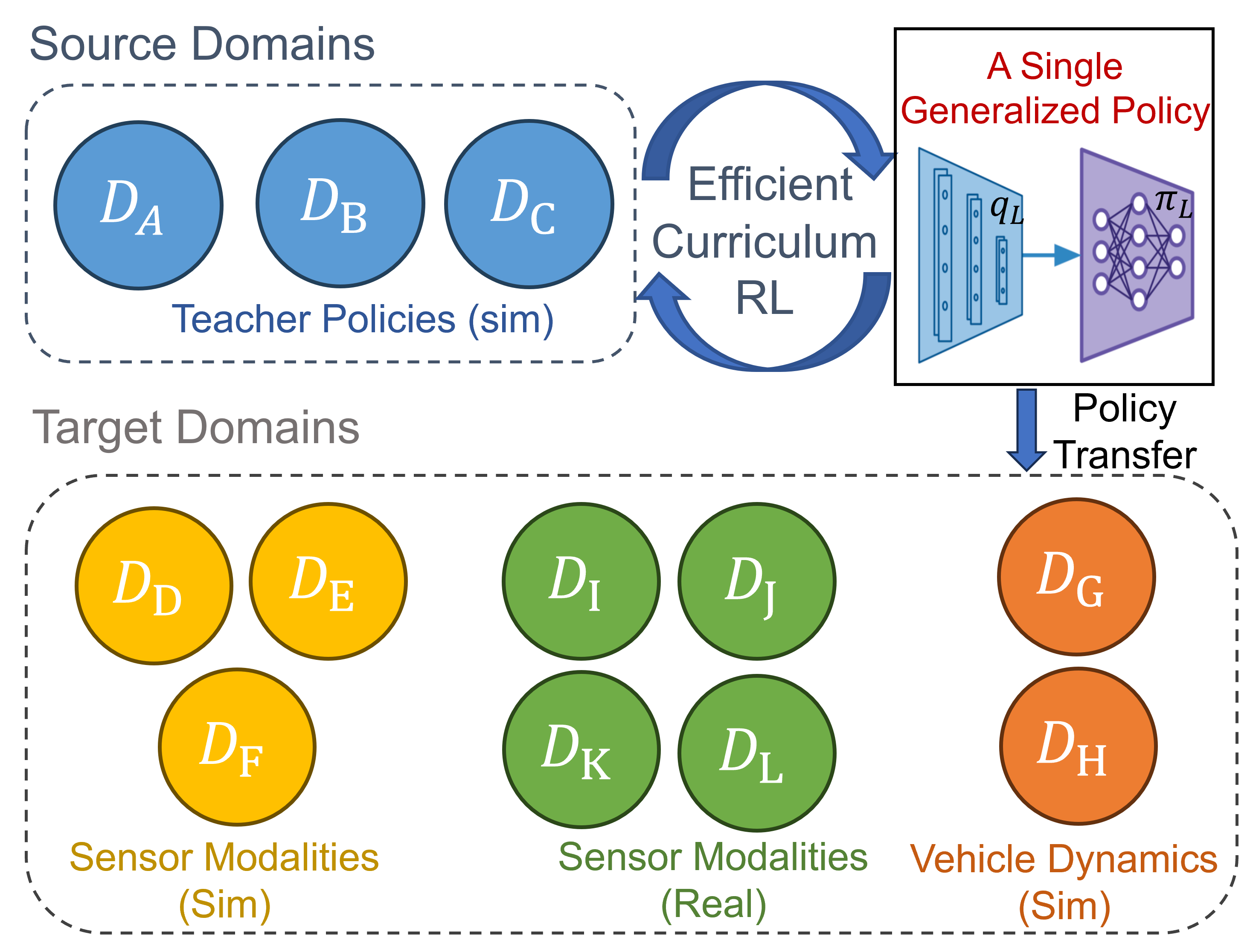

Efficient Transfer Reinforcement Learning of Single Policy for Robust Perception and Resource-constrained Robot Navigation

Project Link

Towards More Efficient EfficientDets and Real-Time Marine Debris Detection

Project Link

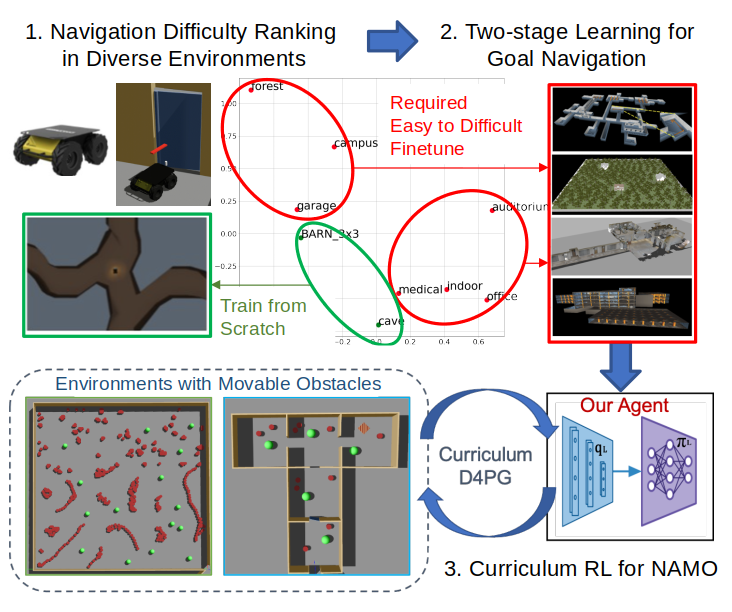

Curriculum Reinforcement Learning from Avoiding Collisions to Navigating among Movable Obstacles in Diverse Environments

Project Link

WFH-VR: Teleoperating a Robot Arm to set a Dining Table across the Globe via Virtual Reality

Project Link

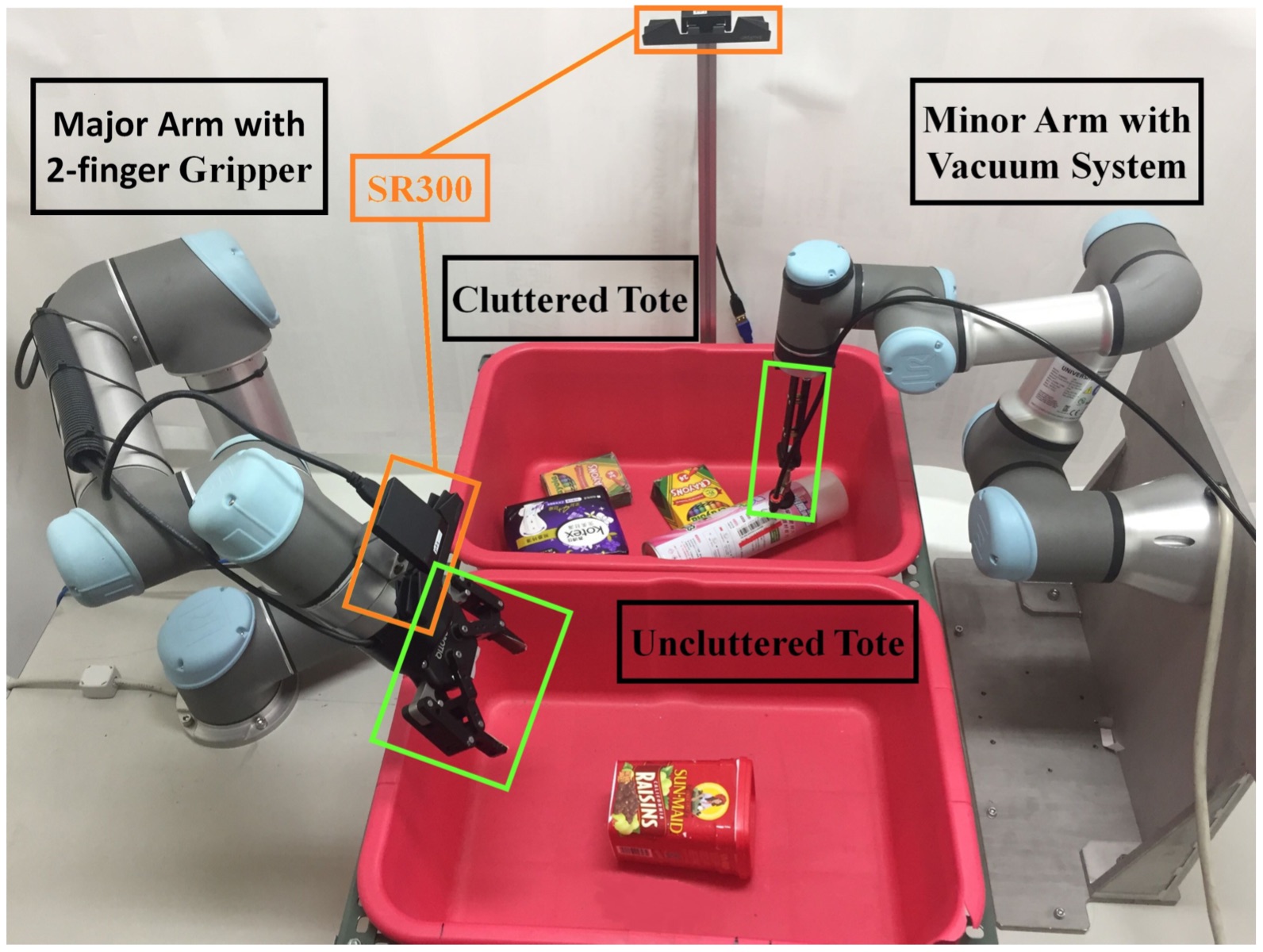

Fed-HANet: Federated Visual Grasping Learning for Human Robot Handovers

Project Link

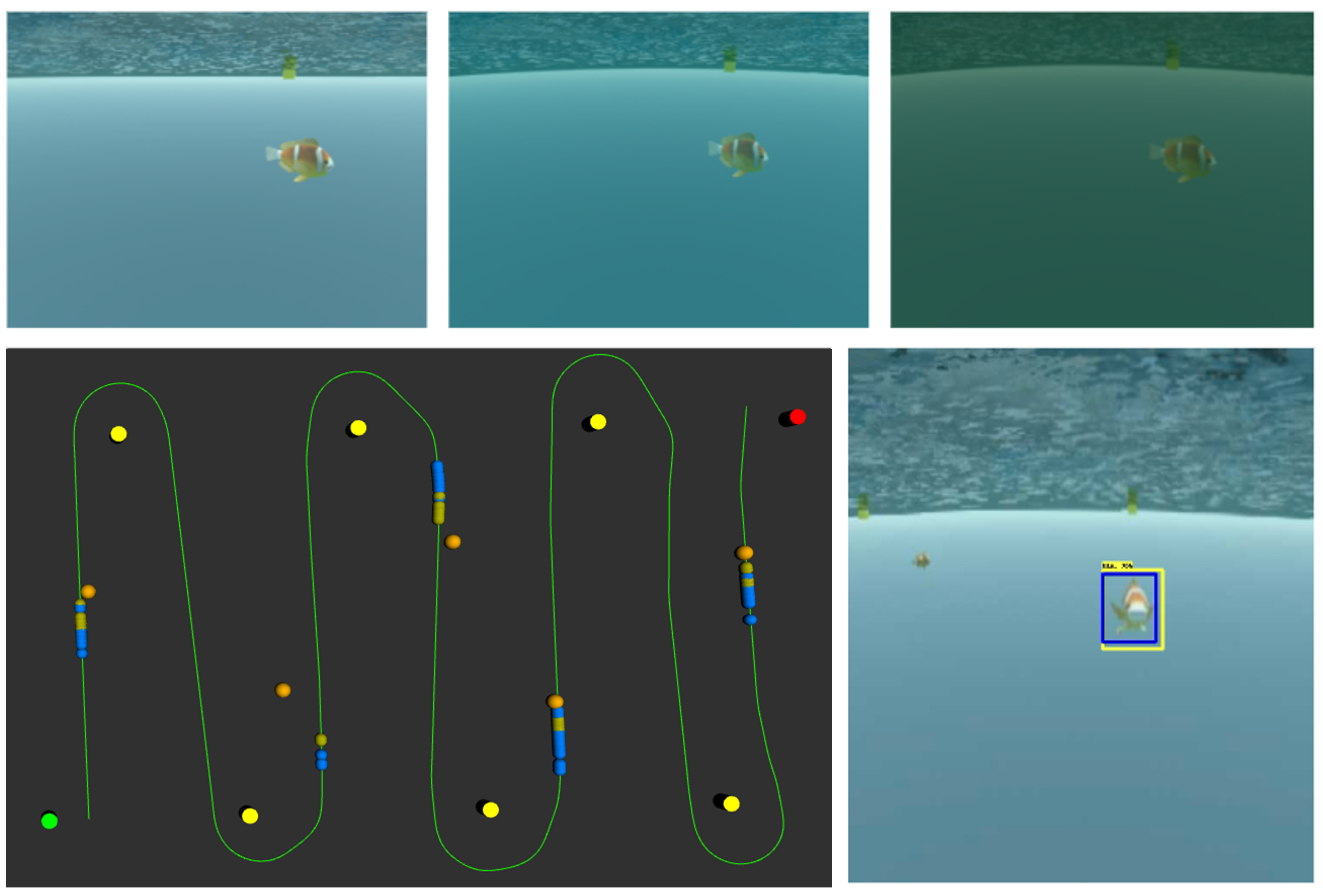

Duckiepond 2.0: an Education and Research Environment of Reinforcement Learning-based Navigation and Virtual Reality Human Interactions for a Heterogeneous Maritime Fleet

Project Link

Assistive Navigation using Deep Reinforcement Learning Guiding Robot with UWB Beacons and Semantic Feedbacks for Blind and Visually Impaired People

Project Link

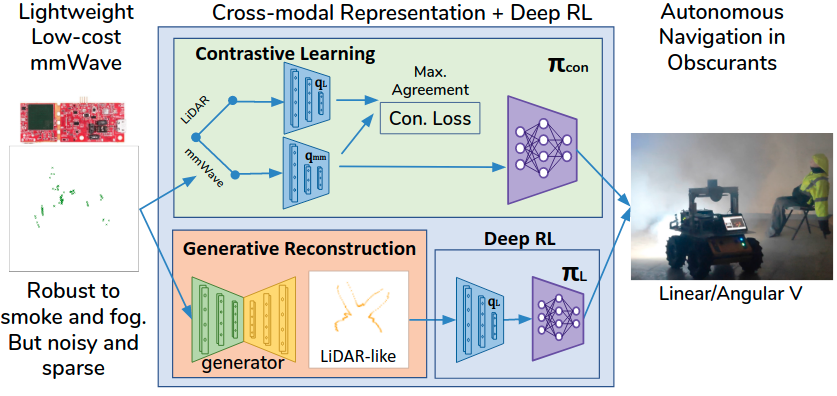

Cross-Modal Contrastive Learning of Representations for Navigation using Lightweight, Low-Cost Millimeter Wave Radar for Adverse Environmental Conditions

Project Link

A Heterogeneous Unmanned Ground Vehicle and Blimp Robot Team for Search and Rescue using Data-driven Autonomy and Communication-aware Navigation

DARPA Subterranean Challenge

We joined the DARPA Subterranean Challenge as Team NCTU. We developed an architecture for a team of heterogeneous unmanned ground vehicles (UGVs) and blimp robots and implemented it so the team can navigate unknown terrain in subterranean environments during search and rescue missions.

Project Link Field Robotics paper link

Duckiepond

Duckiepond is an education and research development environment that includes software systems, educational materials, and a fleet of autonomous surface vehicles called Duckieboats. Duckieboats are designed to be easy to reproduce from 3D-printed and other commercially available parts. Their flexible software builds on several open-source packages.

Project Link

Brandname-based Manipulation

Project Link

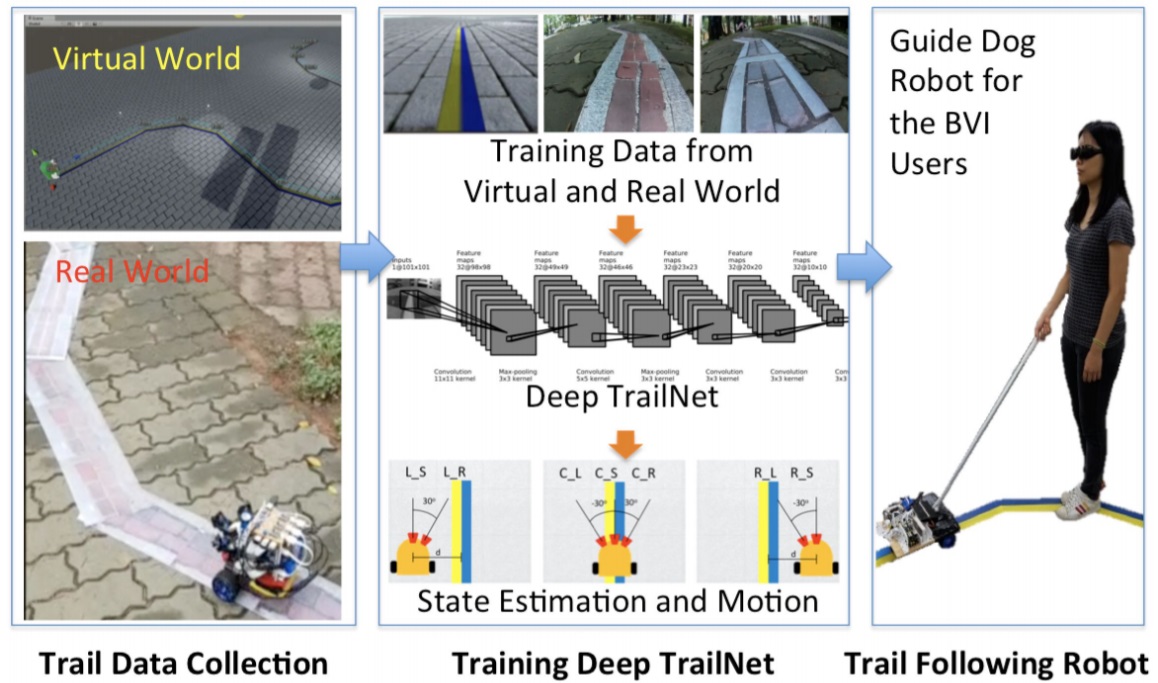

Robotic Guide Dog Project

We developed a robotic guide dog that uses a vision-based learning approach to autonomously follow man-made trails across various terrains in general pedestrian environments. This enables independent navigation for people who are blind or visually impaired (BVI).

Project Link

Duckietown: an Open, Inexpensive and Flexible Platform for Autonomy Education and Research

In Duckietown, inhabitants (duckies) are transported via an autonomous mobility service (Duckiebots). Duckietown is designed to be inexpensive and modular, yet still enable many of the research and educational opportunities of a full-scale self-driving car platform.

Project Link

Duckietown Summer School in 2017

Wearable Navigation System for the Blind and Visually Impaired

Our team develops wearable, miniaturized, energy-efficient, and soft robotic systems that provide key vision functionalities and navigation feedback for blind and visually impaired people. For safe mobility, we develop methods that give users independent awareness of obstacles, drop-offs, ascents, and descents, as well as important objects or landmarks. A haptic array and a rapidly refreshable Braille device deliver information about the surroundings. Poster Website (2014)

RobotX Competition

The inaugural RobotX competition was held in Singapore in Oct. 2014. The competition challenged teams to develop new strategies for tackling unique and important problems in marine robotics. Placard detection was one of the tasks, and our key design objectives were (1) robustness to degradation from several sources: motion; scale and perspective changes from different viewing positions; warping and occlusion caused by wind; and color variations due to lighting conditions; and (2) enough speed and accuracy to support real-time decision-making. Ultimately, the MIT/Olin team narrowly won first place in a competitive field. Paper MIT News

Spatially Prioritized and Persistent Text Detection and Decoding

Our method uses simultaneous localization and mapping (SLAM) to extract planar “tiles” representing scene surfaces. This paper’s contributions include: 1) spatiotemporal fusion of tile observations via SLAM, prior to inspection, thereby improving the quality of the input data; and 2) combination of multiple noisy text observations into a single higher-confidence estimate of environmental text. Paper
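The paper's actual fusion model is not described here. As a rough illustration of contribution (2), the sketch below combines several noisy reads of the same text with a confidence-weighted, per-character vote. It assumes each read has already been aligned to the same length. The function name `fuse_text_observations` and the `(text, confidence)` input format are hypothetical.

```python
from collections import defaultdict


def fuse_text_observations(observations):
    """Fuse noisy reads of one piece of text into a single estimate.

    observations: list of (text, confidence) pairs. Assumption: all strings
    are pre-aligned to equal length and every confidence is positive.
    Returns (fused_text, agreement), where agreement[i] is the share of total
    confidence that voted for the winning character at position i.
    """
    if not observations:
        raise ValueError("need at least one observation")
    length = len(observations[0][0])
    if any(len(text) != length for text, _ in observations):
        raise ValueError("observations must be pre-aligned to equal length")
    if any(conf <= 0 for _, conf in observations):
        raise ValueError("confidences must be positive")

    total_weight = sum(conf for _, conf in observations)
    fused_chars, agreement = [], []
    for i in range(length):
        # Accumulate confidence-weighted votes for each candidate character.
        votes = defaultdict(float)
        for text, conf in observations:
            votes[text[i]] += conf
        best_char, best_weight = max(votes.items(), key=lambda kv: kv[1])
        fused_chars.append(best_char)
        agreement.append(best_weight / total_weight)
    return "".join(fused_chars), agreement
```

For example, the three reads `("EXIT", 0.9)`, `("EX1T", 0.6)`, and `("EXIT", 0.7)` fuse to `"EXIT"`. The lower agreement at the third position flags where the reads disagreed. A real system would also need an alignment step for reads of different lengths.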

The Attraction of Visual Attention to Texts in Real-World Scenes

Where are you more likely to find text?

The present study investigated the factors underlying the attraction of human visual attention by text. The main results were: (a) greater fixation probability and shorter minimum fixation distance for texts confirmed that texts are more attractive; (b) the locations where texts are typically placed partially contribute to this effect; (c) the main attractors of attention are specific visual features of texts, rather than typically salient features (e.g., color, orientation, and contrast); (d) the meaningfulness of texts does not add to their attentional capture; and (e) the attraction of attention depends to some extent on the observer’s familiarity with the writing system and language of a given text. Paper

Predicting Semantic Transparency Judgments

Do you read “butter” when you read “butterfly”? Or “馬” (horse) when you read “馬虎” (careless)?

The morphological constituents of English compounds and two-character Chinese compounds may differ in meaning from the whole word. The proposed models successfully predicted participants’ transparency ratings. Their predictions were influenced by differences in raters’ morphological processing across the two writing systems. We also provide measures of the dominance of lexical meaning, semantic transparency, and the average similarity between all pairs within a morphological family, and we discuss practical applications for future studies. Paper Website

Degraded Character Recognition

Can you read these sentences?

It is known that not all letters are equally important to word recognition. For example, changing initial or exterior letters is more disruptive than changing interior ones. Consistent results have been found for Chinese characters, where first-written strokes are more important for reading. This study compares two influences on readers’ recognition of degraded characters: the high-level, learned stroke writing order, and low-level features obtained via pixel-level singular value decomposition (SVD). Our results suggest that the most important segments determined by SVD can identify first-written strokes, even though SVD carries no information about stroke writing order. Furthermore, our results suggest that contour may be correlated with stroke writing order. Paper Slides
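The study's exact SVD procedure is not given here. As a hedged illustration of "pixel-level SVD", the sketch below assumes a character is a small binary image stored as a 2-D list. It computes only the leading singular triplet, using power iteration to stay dependency-free (`numpy.linalg.svd` would give the full decomposition). It then scores each stroke pixel by its value in the rank-1 reconstruction. The function names and this scoring rule are hypothetical.

```python
import math


def rank1_svd(image, iters=200):
    """Approximate the leading singular triplet (sigma, u, v) of a 2-D list
    by alternating power iteration (u = A v, v = A^T u)."""
    rows, cols = len(image), len(image[0])
    # Assumption: the all-ones start is not orthogonal to the top singular vector.
    v = [1.0] * cols
    sigma, u = 0.0, [0.0] * rows
    for _ in range(iters):
        # u = A v, normalised to unit length
        u = [sum(image[i][j] * v[j] for j in range(cols)) for i in range(rows)]
        norm_u = math.sqrt(sum(x * x for x in u))
        if norm_u == 0.0:
            break
        u = [x / norm_u for x in u]
        # v = A^T u; its norm converges to the largest singular value
        v = [sum(image[i][j] * u[i] for i in range(rows)) for j in range(cols)]
        sigma = math.sqrt(sum(x * x for x in v))
        if sigma == 0.0:
            break
        v = [x / sigma for x in v]
    return sigma, u, v


def rank1_importance(image, iters=200):
    """Hypothetical score: each stroke pixel's value in the rank-1
    reconstruction sigma * u * v^T; background pixels score 0."""
    sigma, u, v = rank1_svd(image, iters)
    return [
        [sigma * u[i] * v[j] if image[i][j] else 0.0 for j in range(len(image[0]))]
        for i in range(len(image))
    ]
```

On a T-shaped 3x3 glyph `[[1, 1, 1], [0, 1, 0], [0, 1, 0]]`, the junction pixel at the top center receives the highest score. Stroke pixels farther down the stem receive lower scores. Keeping more singular components would refine this map.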