Ming-Fong Hsieh1, Wei-Li Liou1, Sun-Fu Chou1, Chang-Jin Wu1, Yu-Ting Ko1, Kuan-Ting Yu2, Hsueh-Cheng Wang*,1

_________________________________________________________________

1National Yang Ming Chiao Tung University (NYCU), Hsinchu, Taiwan.

2XYZ Robotics

Abstract

Advancements in large language models (LLMs) have facilitated their incorporation into human-operated high-level robotic task planners. Research on task planning involving LLMs has focused on generating sequential plans; however, such plans might be insufficient for complex applications. We investigated the effects of prompt engineering on behavior tree (BT) generation by LLMs to enhance the performance of LLMs in few-shot example tasks and to improve task success rates. First, we developed BT-Prompt, an example task format that maximizes the few-shot learning performance of LLMs. Experiments indicated that LLM-generated BTs were more effective for task completion than other formats in few-shot scenarios. Second, we demonstrated that BT-Prompt can facilitate designing hierarchical task structures, i.e., mid-level subtrees organizing a collection of low-level controllers. Experiments revealed that LLM-generated mid-level subtrees could achieve comparable success rates to those of hand-coded mid-level subtrees in complex tasks performed with various LLM backbones and under different temperature settings. LLMs with nonzero temperature were used to generate nondeterministic BTs for autonomously producing mid-level subtrees requiring minimal human guidance; these subtrees were tested through application in a series of challenging tasks. For these tasks, preexisting hand-coded mid-level subtrees were insufficient; however, integrating the LLM-generated subtrees with the preexisting hand-coded mid-level subtrees improved the task performance. Finally, the proposed system was successfully executed in virtual and real environments.

Video

Appendix A: BT-Prompt Generation and Translation Mechanism with Example for 3 Tasks

Two steps of translating pseudo-code z into executable BT script y

BT Node Parsing

This initial phase involves deconstructing the pseudo-code into a raw symbolic tree, identifying the distinct subtrees.

The steps include:

- Parsing the Pseudo-Code Formatted BT into Tree Logic: This involves extracting the starting procedures of the tree from the provided responses and organizing the phases according to their layer.

- Structure and Classification: Here, the phases are classified based on their hierarchical relationships and conditions (indicating success or failure).

- Tree Construction: A tree structure is created based on the parsed information where node types (sequence, selector, parallel) are assigned based on the outcomes of the classification. These nodes are then interconnected to construct the overall tree architecture.

Subtree Assembly

In this subsequent step, the raw symbolic tree is further refined to become the final, executable symbolic BT.

This involves:

- Tree Analysis: Traversing the entire tree to categorize the different elements of tree logic and identify all the subtree titles.

- Subtree Replacement: This step involves substituting the placeholder subtree titles with actual subtrees, which contain specific conditions and actions pertinent to the behavior tree's functionality.

- Final Output: The end product is a fully executable symbolic BT, equipped with all necessary conditions and actions for deployment.
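The two steps above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the translator used in the experiments: the indentation-based pseudo-code layout, the operator symbols `->` and `?`, the `@` prefix marking a subtree title, and the names `Node`, `parse`, and `assemble` are all hypothetical (only the `||` parallel symbol appears elsewhere on this page).

```python
import copy
from dataclasses import dataclass, field

# Hypothetical operator symbols; the actual BT-Prompt syntax may differ.
NODE_TYPES = {"->": "sequence", "?": "selector", "||": "parallel"}


@dataclass
class Node:
    kind: str  # "sequence", "selector", "parallel", "subtree", or "leaf"
    name: str = ""
    children: list = field(default_factory=list)


def parse(pseudo_code: str) -> Node:
    """Step 1 (node parsing): build a raw symbolic tree from indented lines."""
    root = None
    stack = []  # (indent, node) pairs describing the current ancestor chain
    for line in pseudo_code.splitlines():
        if not line.strip():
            continue
        indent = len(line) - len(line.lstrip())
        token = line.strip()
        if token in NODE_TYPES:
            node = Node(NODE_TYPES[token])
        elif token.startswith("@"):
            node = Node("subtree", token[1:])  # placeholder subtree title
        else:
            node = Node("leaf", token)  # condition or action
        # Pop back to the nearest ancestor with a smaller indent.
        while stack and stack[-1][0] >= indent:
            stack.pop()
        if stack:
            stack[-1][1].children.append(node)
        elif root is None:
            root = node
        else:
            raise ValueError("pseudo-code must have a single root node")
        stack.append((indent, node))
    return root


def assemble(node: Node, library: dict) -> Node:
    """Step 2 (subtree assembly): replace subtree titles with actual subtrees."""
    if node.kind == "subtree":
        # Copy so that a subtree reused in several places is not shared.
        return assemble(copy.deepcopy(library[node.name]), library)
    node.children = [assemble(child, library) for child in node.children]
    return node


# Hypothetical subtree library and LLM response, for illustration only.
library = {"find_target": parse("?\n  target_visible\n  rotate_search")}
raw = parse("->\n  @find_target\n  ||\n    servo_x\n    servo_y\n    servo_z")
bt = assemble(raw, library)
```

After assembly, `bt` is a sequence whose first child is the expanded selector subtree and whose second child is a parallel node with three action leaves.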

Three Tasks of GPT-3 BT Generation:

The input prompt, pseudo-code formatted BT, and translated executable BT script for the GPT-3 generation in Experiment 1 are available at the links below:

Visual Servoing

Inspection

Object Navigation

Appendix B: Low-level, Mid-level, and Final Tree of BT with Real-World Scenario

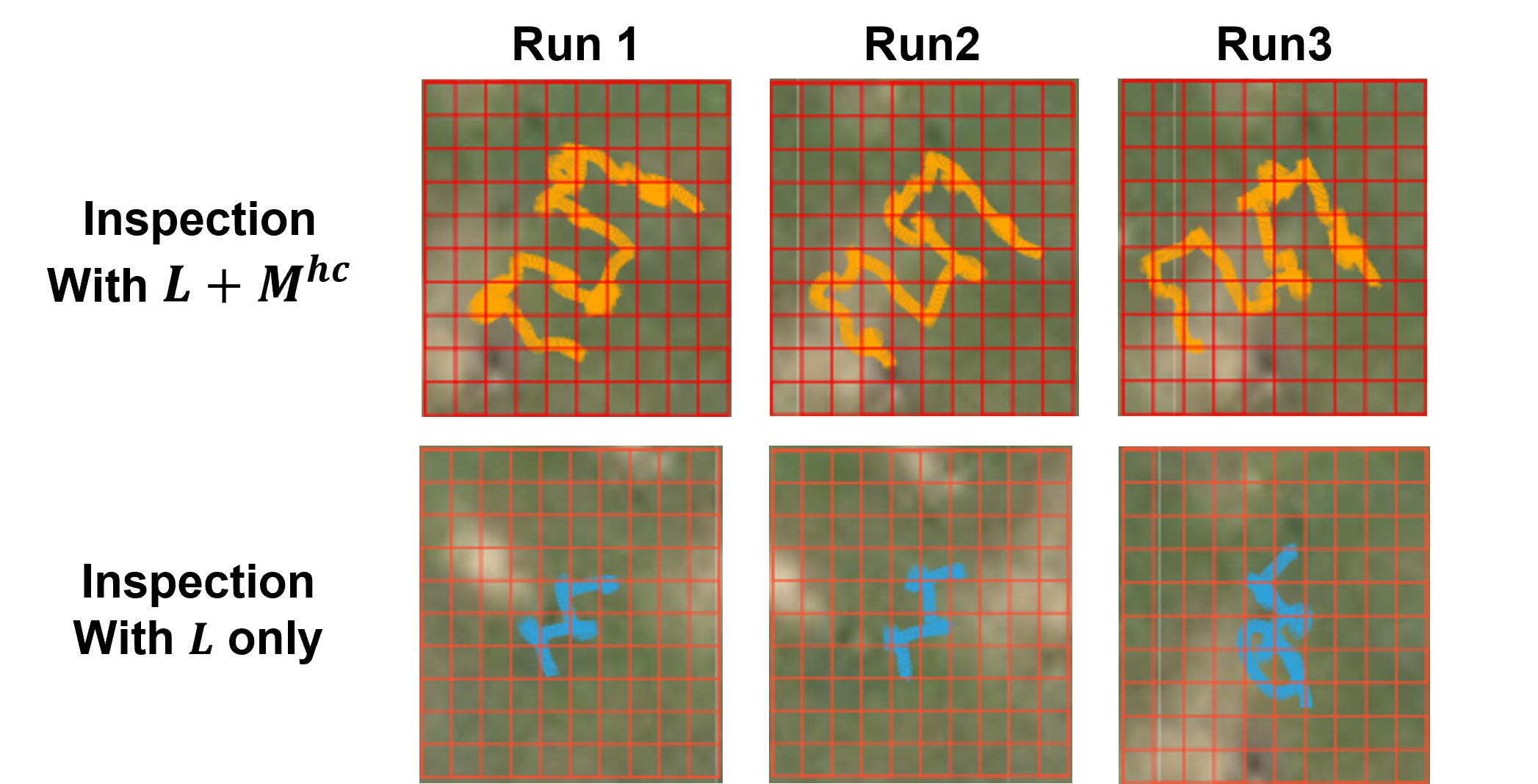

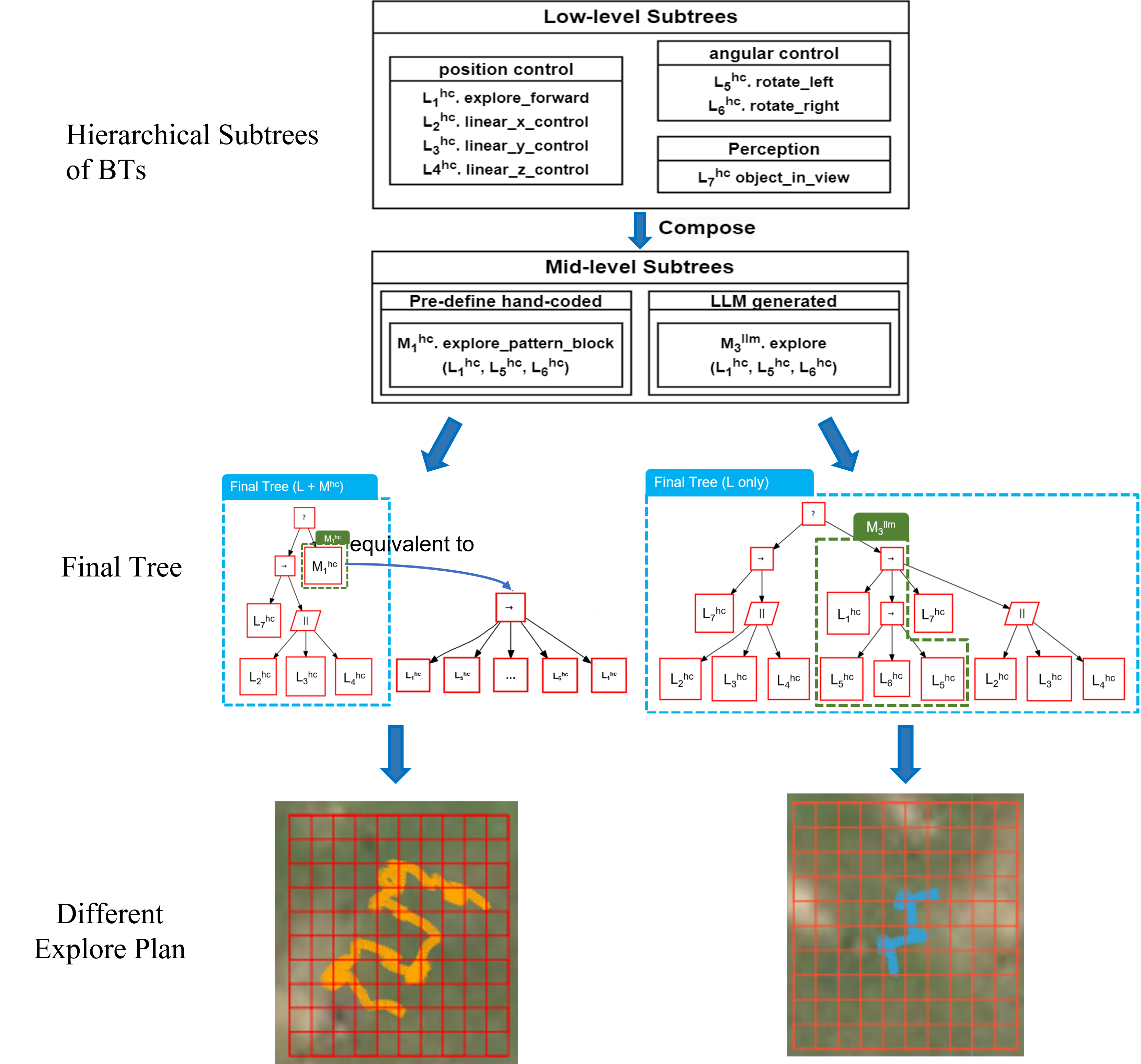

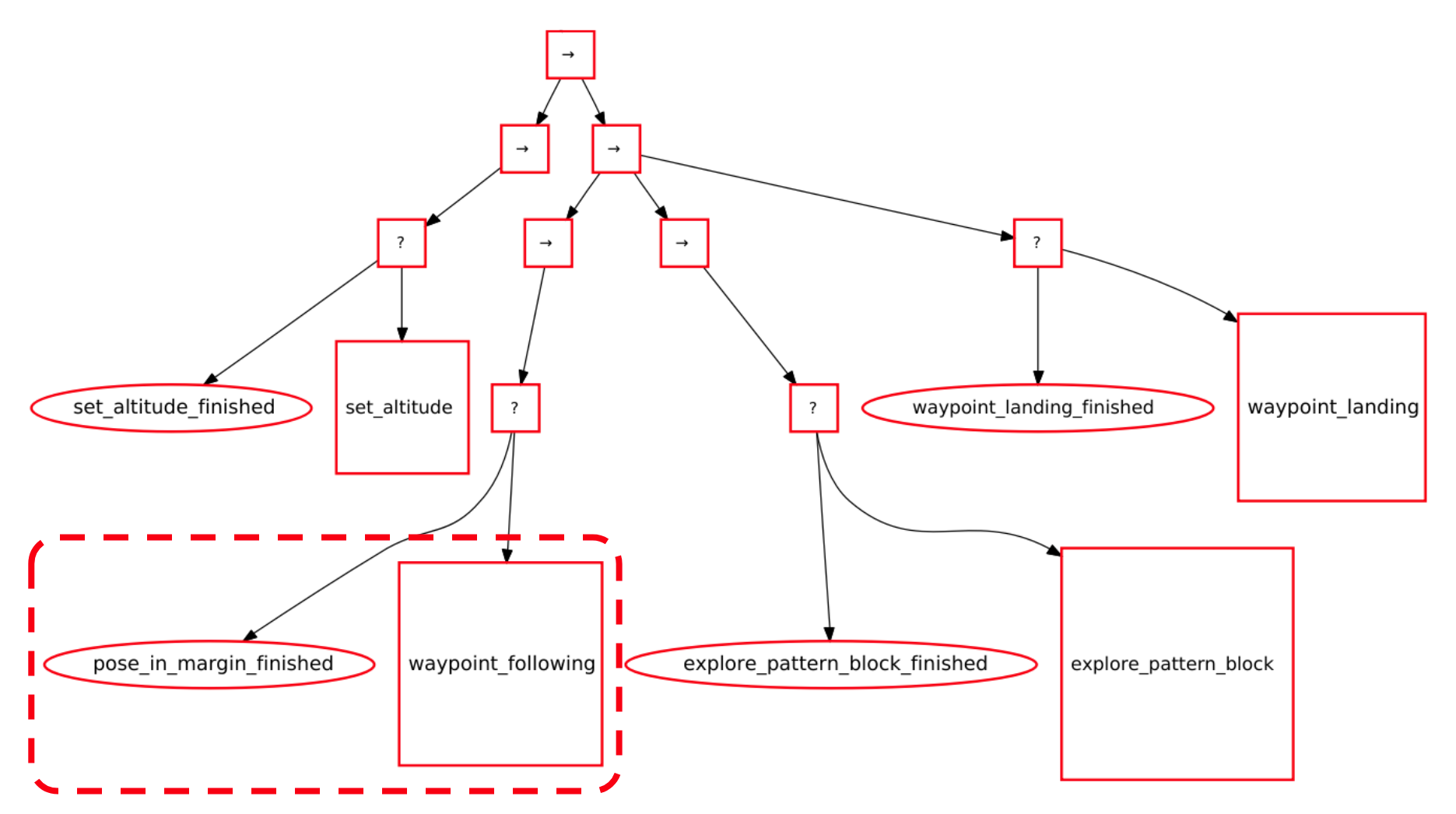

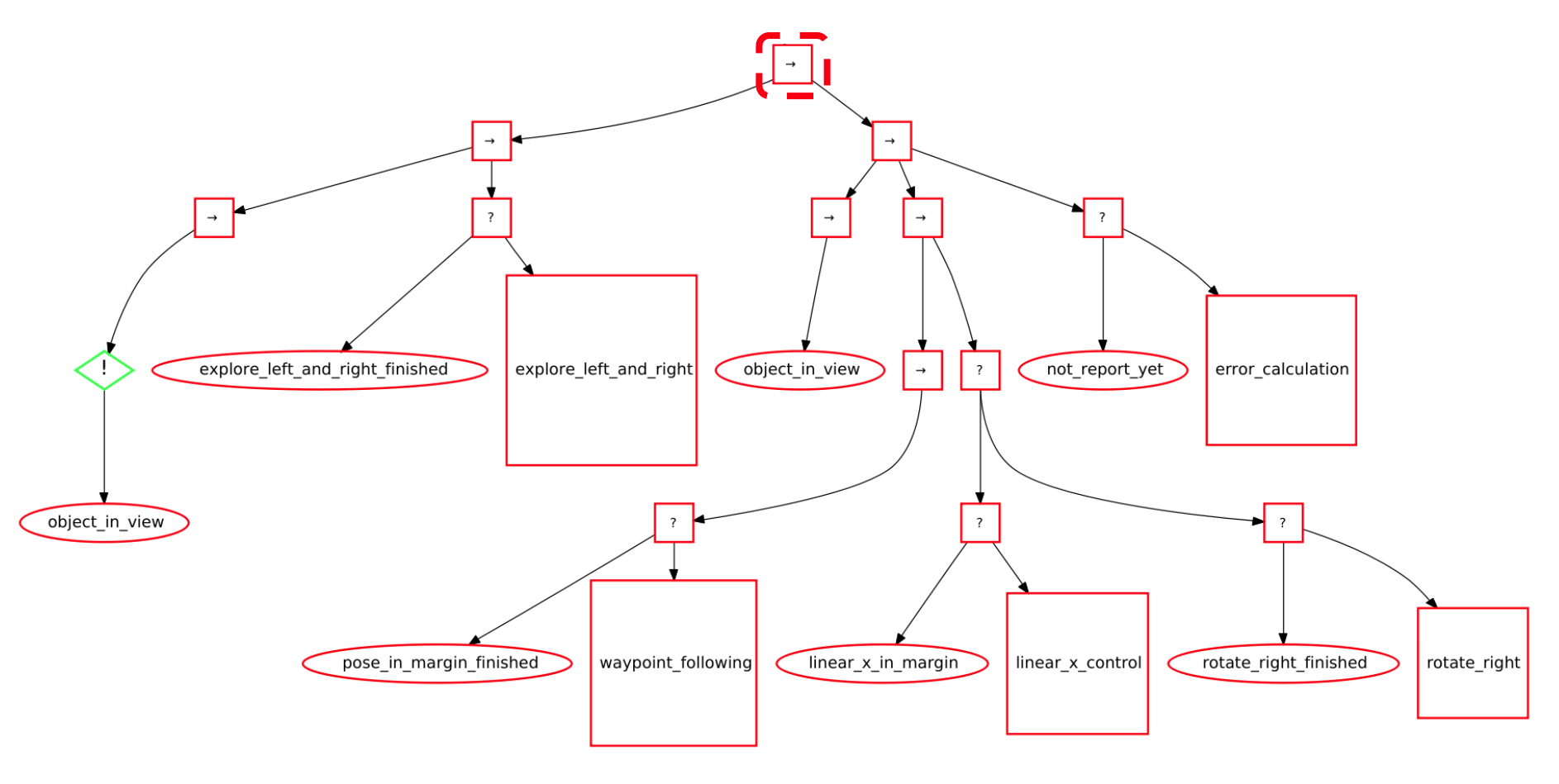

This appendix shows the relationship between low-level and mid-level subtrees and how they are combined into final BTs.

Top: Hierarchical subtrees of BTs. Low-level subtrees are primarily associated with basic position control, rotation control, and perception. Mid-level subtrees comprise multiple low-level subtrees and are either hand-coded (hc) or LLM-generated (llm).

Middle: Final tree, formed by combining low-level and mid-level subtrees.

Bottom: Different exploration plans, showing the trajectories of the Mhc explore pattern block and the Mllm explore subtree.

Appendix C: Executable BTs Threading and Multiprocessing Mechanisms with ROS

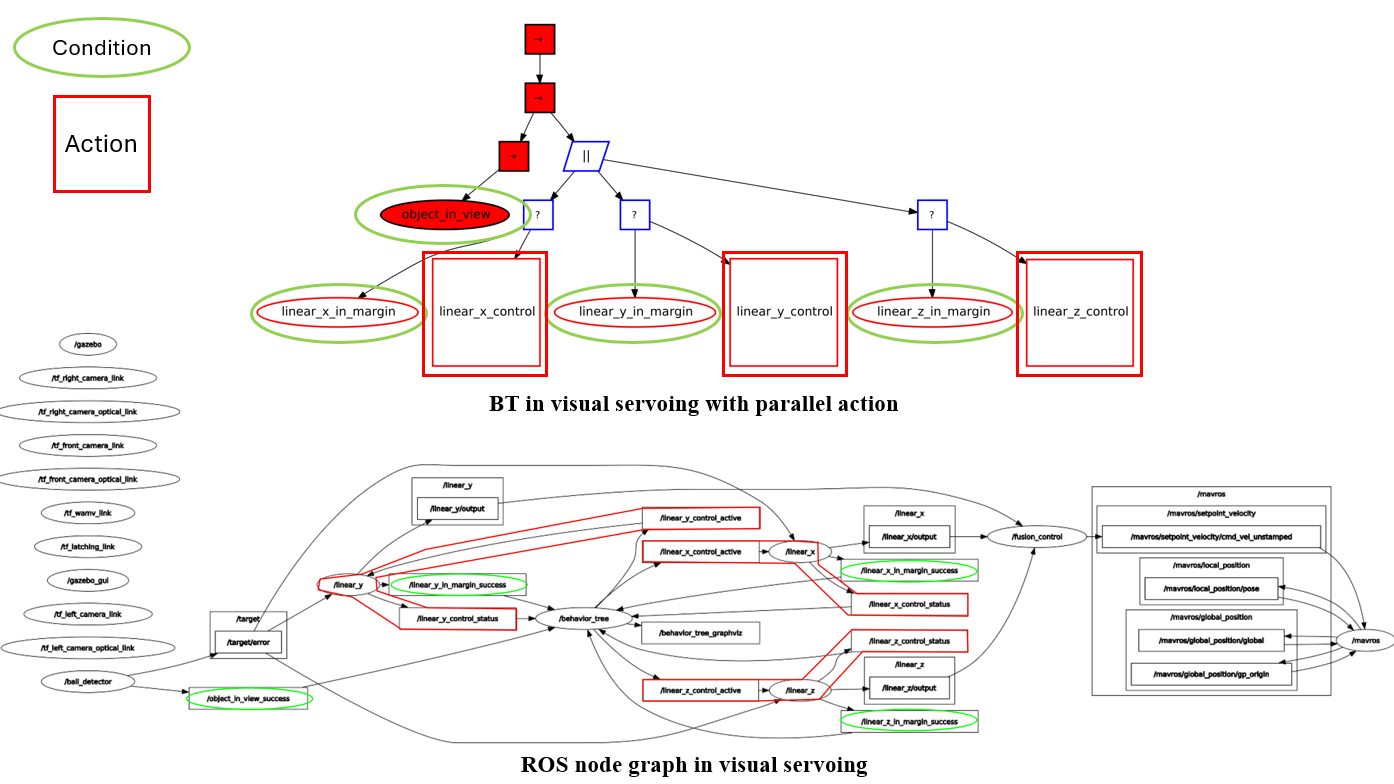

Handling parallel actions is a significant challenge for existing Pythonic code generation, because the LLM planner must generate multi-threaded code to manage concurrent actions. To address this, we developed a method that combines behavior trees (BTs) with the Robot Operating System (ROS) to handle parallel actions more efficiently.

As illustrated in the upper part of the figure, the BT manages the logic of visual servoing, while the lower part shows the corresponding ROS node diagram. In this setup, the condition nodes in the BT correspond to ROS topics (indicated by green circles), and the action nodes are managed by independent ROS nodes (denoted by red squares).

Specifically, in the domain of visual servoing, the controls for x, y, and z axes are implemented as three separate ROS nodes. These nodes operate in different processes facilitated by the ROS infrastructure. Consequently, the parallel logic in the BT (symbolized by the parallel operator '||') can activate these action nodes simultaneously, enabling synchronized linear control along the x, y, and z axes for visual servoing.

This approach demonstrates that parallel actions can be executed effectively using BT-Prompt within the ROS framework, showing its potential for concurrent execution in robotics applications.
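The parallel logic described above can be illustrated with a small, ROS-free sketch. It assumes a parallel node that ticks every child on each cycle and succeeds once all children succeed; the `AxisServo` class is a hypothetical stand-in for one of the per-axis ROS nodes, and its proportional gain and tolerance values are illustrative only.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2
    RUNNING = 3


class AxisServo:
    """Hypothetical stand-in for one ROS node controlling a single axis."""

    def __init__(self, axis, error, gain=0.5, tolerance=0.01):
        self.axis = axis
        self.error = error  # remaining offset to the target along this axis
        self.gain = gain
        self.tolerance = tolerance

    def tick(self):
        # Non-blocking: report success or apply one proportional correction.
        if abs(self.error) <= self.tolerance:
            return Status.SUCCESS
        self.error -= self.gain * self.error
        return Status.RUNNING


class Parallel:
    """Ticks every child on each cycle; succeeds once all children succeed."""

    def __init__(self, children):
        self.children = children

    def tick(self):
        statuses = [child.tick() for child in self.children]
        if Status.FAILURE in statuses:
            return Status.FAILURE
        if all(s is Status.SUCCESS for s in statuses):
            return Status.SUCCESS
        return Status.RUNNING


def run(tree, max_ticks=100):
    """Tick the tree until it stops running or the tick budget is spent."""
    for n in range(1, max_ticks + 1):
        status = tree.tick()
        if status is not Status.RUNNING:
            return status, n
    return Status.RUNNING, max_ticks


axes = [AxisServo("x", 0.4), AxisServo("y", -0.2), AxisServo("z", 0.1)]
status, ticks = run(Parallel(axes))
```

Because each child only performs a short, non-blocking step per tick, the tree itself needs no threading code. In the real system the concurrency comes from the independent ROS processes rather than from the BT.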

Appendix D: New Tasks in Experiment 3 (RobotX Challenge 2022)

Detect and Dock (Dock.):

In this task, there's a floating platform with three bays, each having a different color (red, green, or blue).

The AMS detects the color and docks in the bay with the matching color.

Scan the code (Scan.):

In this task, there's a three-sided light tower on a floating platform that displays a sequence of RGB lights.

The AMS observes the colors and their order and uses this sequence for other tasks in later rounds.

Find and Fling (Shoot.):

In this task, there's a floating platform with three panels, each having a colored square and two holes.

The AMS detects a designated color and shoots racquetballs through the panel's holes.

Each team gets four racquetballs for this.

Entrance and Exit Gates (Gate.):

In this task, the AMS needs to go through gates marked by colored buoys and underwater beacons.

The AMS must detect the underwater beacon signals between these gates and enter through them before moving on to other tasks. The task's complexity increases in each round and includes elements from other tasks.

The beacons have different frequencies and activate one at a time during the task. There are three gates:

Gate 1 has a red and a white buoy.

Gate 2 has two white buoys.

Gate 3 has a white and a green buoy.

Wildlife Encounter (Wildlife.):

This task involves three floating platforms that look like Australian marine animals: a platypus, a turtle, and a crocodile.

The AMS detects and reacts to them using a hyperspectral imaging (HSI) camera.

Teams can use a UAV for help. After detection, the AMS circles the platypus clockwise, the turtle counterclockwise, and the crocodile twice in any direction.
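The circling rule can be written as a small lookup table, sketched below. The names `CircleRule`, `CIRCLE_RULES`, and `plan_circling` are hypothetical. The sketch also assumes a single lap for the platypus and the turtle (the description only gives a lap count for the crocodile) and resolves "any direction" to clockwise.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CircleRule:
    direction: str  # "cw", "ccw", or "any"
    laps: int


# Lap counts of 1 are an assumption; only the crocodile's count is stated.
CIRCLE_RULES = {
    "platypus": CircleRule("cw", 1),
    "turtle": CircleRule("ccw", 1),
    "crocodile": CircleRule("any", 2),
}


def plan_circling(detections, default_direction="cw"):
    """Return (animal, direction, laps) for each detection, in order."""
    plan = []
    for animal in detections:
        rule = CIRCLE_RULES[animal]
        direction = default_direction if rule.direction == "any" else rule.direction
        plan.append((animal, direction, rule.laps))
    return plan


plan = plan_circling(["turtle", "crocodile", "platypus"])
# plan == [("turtle", "ccw", 1), ("crocodile", "cw", 2), ("platypus", "cw", 1)]
```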

UAV Search and Report (Search.):

In this land-based UAV task mimicking a search and rescue operation, the UAV starts from one point, searches a field marked with orange markers, finds two objects there, and lands at another designated point. Teams can use any search pattern within the field boundaries.

They report the objects they locate and their exact positions.

Follow the Path (Follow.):

This task requires the Autonomous Maritime System (AMS) to follow a designated path of white buoys, pass through six pairs of red and green buoys, and exit through another set of white buoys.

The AMS must steer clear of randomly placed obstacles, symbolized by round black buoys.

Teams have the option to employ a UAV for assistance in completing this task.

UAV Replenishment (Deliver.):

This task involves using a UAV. The UAV takes off from a USV, finds a floating helipad, picks up a small colored tin, delivers the tin to a circular target area on another floating helipad, and then returns to the USV.

Appendix E: Analysis of Failure BT Generation - Two Types of Failure

Type 1 - The inclusion of unnecessary subtrees or a lack of necessary subtrees can result in task failure.

When the LLM generates the "inspection" task, it incorporates the subtree in the red-boxed area into the task plan. However, because this subtree is designed for "tracking object," it requires a target object to satisfy its completion condition. Since the "inspection" task does not involve detecting objects, the behavior tree may halt at this stage because no target object is present, and it cannot progress further.

Type 2 - Misinterpretation of the logic expressed through example tasks can result in task failure.

When the LLM generates the "object navigation" task, it misinterprets the logic expression symbols in the red-boxed area, treating a fallback as a sequence. Because of this misinterpretation, the behavior tree cannot transition to the tracking state even when it detects the target object.
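The effect of swapping a fallback for a sequence can be reproduced in a toy example. The tree below is a hypothetical simplification, not the tree from the experiment: the condition `target_detected` and the actions `explore` and `track` are illustrative names, and the intended logic is assumed to be "explore until the target is detected, then track."

```python
SUCCESS, FAILURE, RUNNING = "SUCCESS", "FAILURE", "RUNNING"


def sequence(*children):
    """Tick children in order; stop at the first one that does not succeed."""
    def tick(world):
        for child in children:
            status = child(world)
            if status != SUCCESS:
                return status
        return SUCCESS
    return tick


def fallback(*children):
    """Tick children in order; stop at the first one that does not fail."""
    def tick(world):
        for child in children:
            status = child(world)
            if status != FAILURE:
                return status
        return FAILURE
    return tick


def target_detected(world):
    return SUCCESS if world["target_visible"] else FAILURE


def explore(world):
    world["log"].append("explore")
    return RUNNING


def track(world):
    world["log"].append("track")
    return RUNNING


# Intended logic: explore only while no target is detected, then track.
intended = sequence(fallback(target_detected, explore), track)
# Misread logic: the inner fallback has been replaced by a sequence.
misread = sequence(sequence(target_detected, explore), track)


def actions_run(tree, target_visible):
    """Tick the tree once and return the actions that were executed."""
    world = {"target_visible": target_visible, "log": []}
    tree(world)
    return world["log"]


print(actions_run(intended, True))   # ['track']
print(actions_run(misread, True))    # ['explore']
print(actions_run(misread, False))   # []
```

With the misread tree, a detected target leads to exploring again instead of tracking, and an undetected target makes the whole tree fail, so the tracking action is never reached in either case.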

Appendix F: Real-World Inspection Trajectory with L and L + Mhc