Po-Jui Huang1, Yu-Ting Ko1, Yi-Chen Teng1, Hsueh-Cheng Wang1,*, Chun-Ting Huang2, Po-Ruey Lei3

________________________________________________

1National Yang Ming Chiao Tung University (NYCU), Hsinchu, Taiwan.

2Qualcomm Inc., USA

3Department of Electrical Engineering, R.O.C. Naval Academy.

Abstract

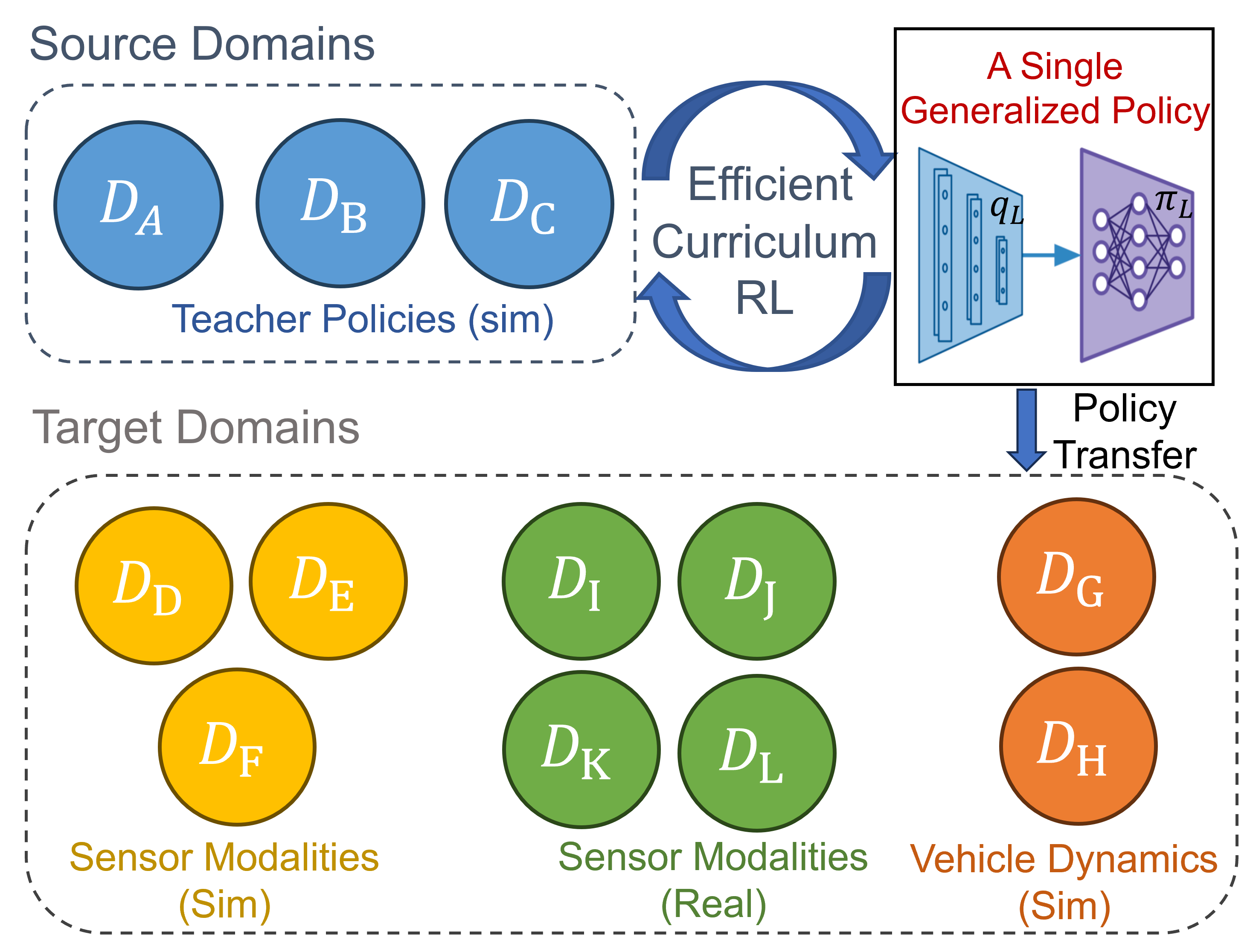

Deep reinforcement learning (RL) has proven highly effective via trial-and-error learning, empowering embodied robots with advanced sensing capabilities when navigating variable environments. However, the broad adoption of deep RL across diverse embodied robots has been hindered by the time and expense of collecting the enormous amount of data required to learn tasks from scratch. Transfer learning may serve as a solution: it allows an agent to leverage prior knowledge and experience, and it facilitates quick, efficient adaptation from source domains to target domains. Our objective in this study was to derive a generalized deep RL policy that facilitates navigation transfer across variable embodied robots via an efficient curriculum transfer learning paradigm. The policy trained with the proposed method outperformed existing policies across a range of simulations and real-world tests involving a variety of challenging scenarios, such as partial or noisy inputs, vehicle dynamics, and sim-to-real evaluations.

Video

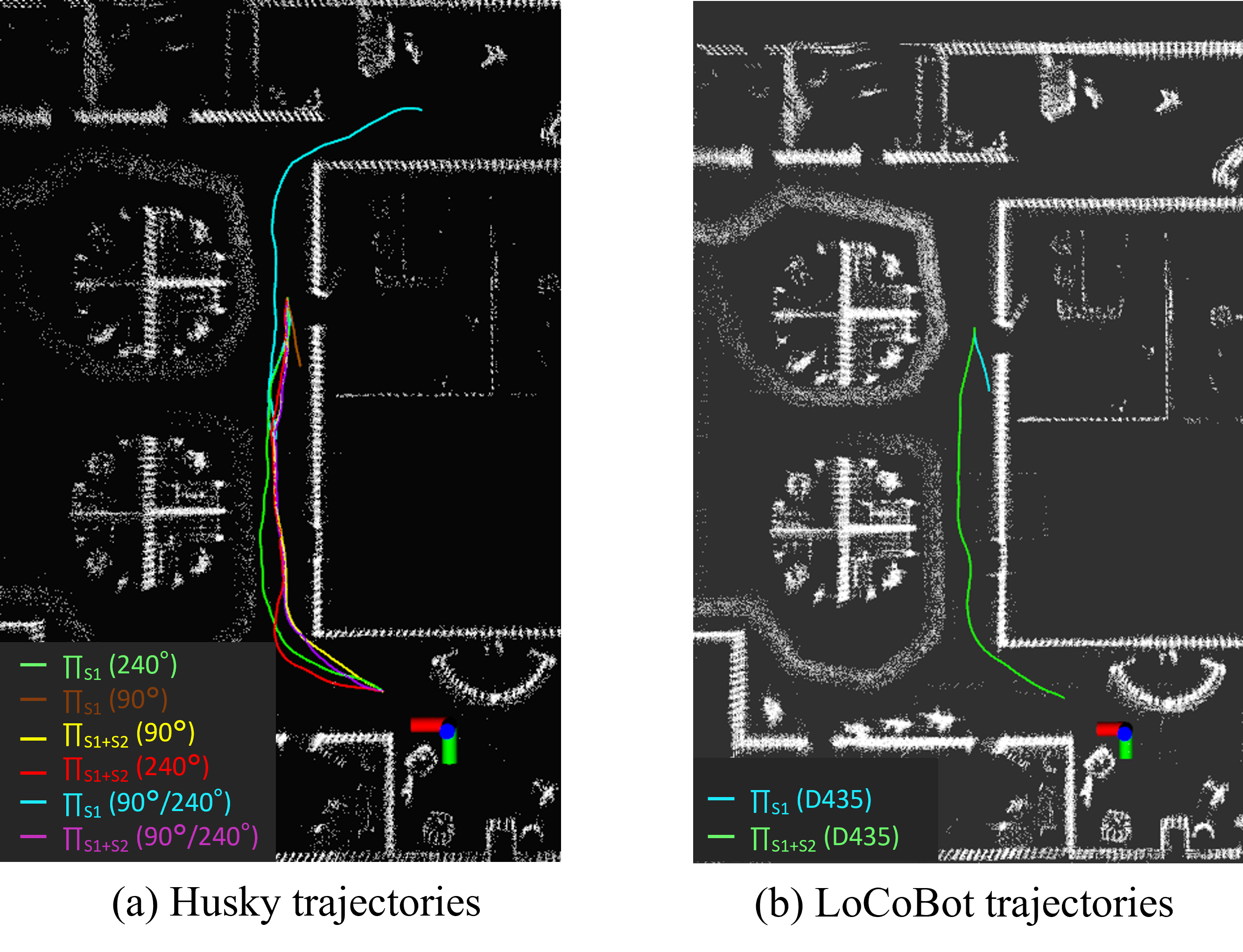

Appendix A: Evaluation trajectories for different policies in simulation

In (a), the curriculum policy πS1+S2 performs strongly in both the 240° and 90° scenarios, even when switching between LiDAR observations with different fields of view. This demonstrates that our proposed curriculum policy generalizes effectively across sensor fields of view. In (b), the curriculum policy πS1+S2 also achieves good results on the LoCoBot using an RGBD camera for observations. This outcome is evidence that our curriculum policy can generalize to various robots equipped with different sensor suites.
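To make the 240° versus 90° comparison concrete, the sketch below shows one way a wide LiDAR scan could be narrowed to a smaller field of view while keeping the observation length fixed. It is only an illustration under stated assumptions and not the preprocessing used in our experiments: the function name `restrict_fov`, the evenly spaced beam layout centered on the forward direction, and the choice to overwrite out-of-view beams with a fill value are all hypothetical.

```python
from typing import List


def restrict_fov(
    ranges: List[float],
    full_fov_deg: float,
    target_fov_deg: float,
    fill: float,
) -> List[float]:
    """Keep beams within +/- target_fov_deg / 2 of the forward direction.

    Assumption (hypothetical): beams are evenly spaced over full_fov_deg
    and centered on the forward direction. Beams outside the target field
    of view are overwritten with `fill`, so the output length is unchanged.
    """
    n = len(ranges)
    if n < 2:
        return list(ranges)

    step = full_fov_deg / (n - 1)   # angular spacing between adjacent beams
    half = target_fov_deg / 2.0     # half-width of the narrowed view

    out = []
    for i, r in enumerate(ranges):
        angle = -full_fov_deg / 2.0 + i * step  # beam angle in degrees
        out.append(r if abs(angle) <= half + 1e-9 else fill)
    return out


# Example: a 240-degree scan with one beam per degree, narrowed to 90 degrees.
scan = [1.0] * 241
narrow = restrict_fov(scan, 240.0, 90.0, fill=10.0)
```

Keeping the vector length constant in this sketch means the same network input layer could, in principle, accept both the wide and the narrow observation; whether that matches a given policy's input format is an assumption.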

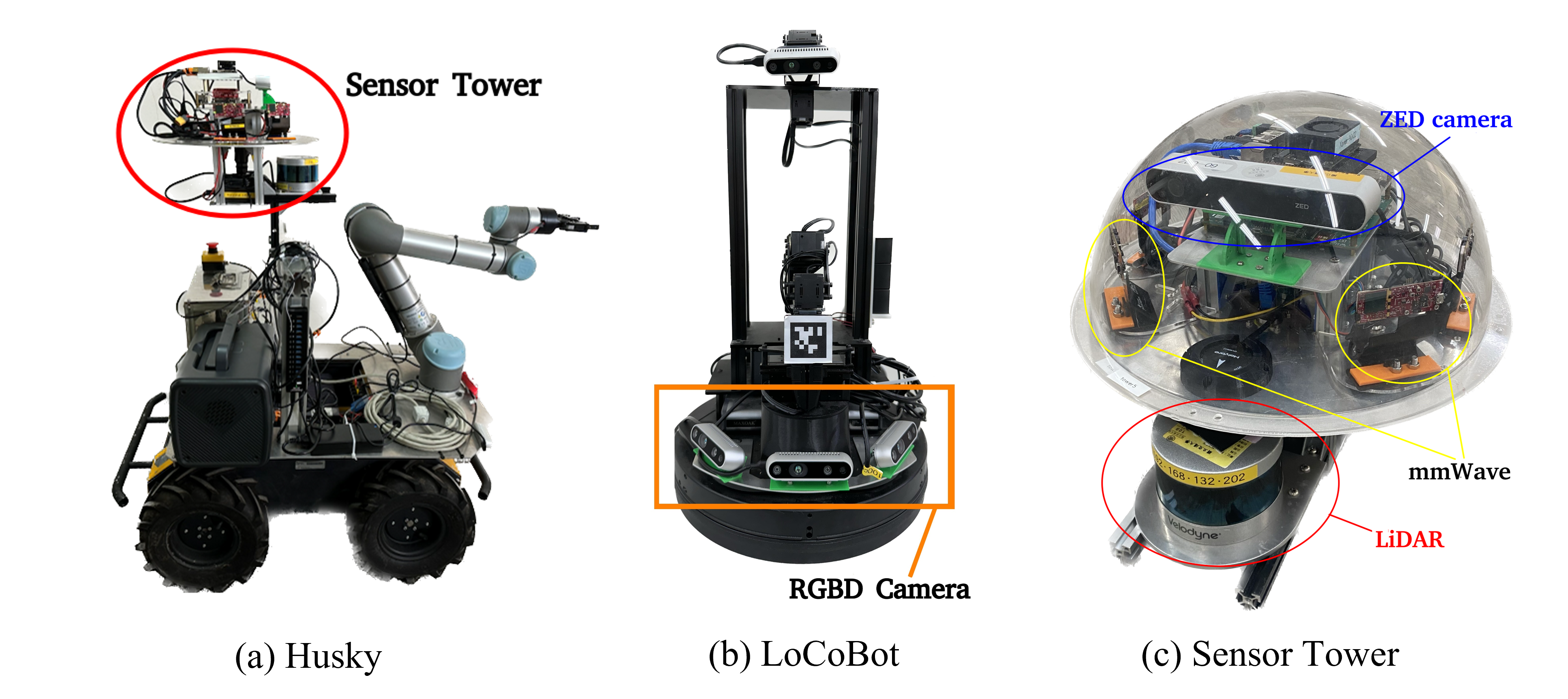

Appendix B: UGV Real Robot in Our Experiment

We conducted real-world experiments with two different robots, as illustrated in (a) and (b). The robot in (a) was equipped with a VLP-16 LiDAR and a mmWave radar as its primary sensors. The robot in (b) used a depth-sensing camera, specifically the RealSense D435, as its main sensor. In (c), we present our sensor tower, a comprehensive perception module housing the sensors, computing units, and power supply required for our experiments.