Ching-I Huang1, Sun-Fu Chou1, Li-Wei Liou1, Nathan Alan Moy2,

Chi-Ruei Wang1, Hsueh-Cheng Wang1, Charles Ahn2, Chun-Ting Huang3, and Lap-Fai Yu2

________________________________________________

1Institute of Electrical and Computer Engineering, National Yang Ming Chiao Tung University, Taiwan.

2Department of Computer Science, George Mason University, USA.

3Qualcomm Inc., USA

Abstract

Robots are essential for tasks that are hazardous or beyond human capabilities. However, the results of the Defense Advanced Research Projects Agency (DARPA) Subterranean (SubT) Challenge revealed that despite various techniques for robot autonomy, human input is still required in some complex situations. Moreover, heterogeneous multirobot teams are often necessary. To manage these teams, effective user interfaces to support humans are required. Accordingly, we present a framework that enables intuitive oversight of a robot team through immersive virtual reality (VR) visualizations. The framework simplifies the management of complex navigation among movable obstacles (NAMO) tasks, such as search-and-rescue tasks. Specifically, the framework integrates a simulation of the environment with robot sensor data in VR to facilitate operator navigation, enhance robot positioning, and greatly improve operator situational awareness. The framework can also boost mission efficiency by seamlessly incorporating autonomous navigation algorithms, including NAMO algorithms, to reduce detours and operator workload. The framework is effective for operating in both simulated and real scenarios and is thus ideal for training or evaluating autonomous navigation algorithms. To validate the framework, we conducted user studies (N = 53) on the basis of the DARPA SubT Challenge’s search-and-rescue missions.

Video

System Overview

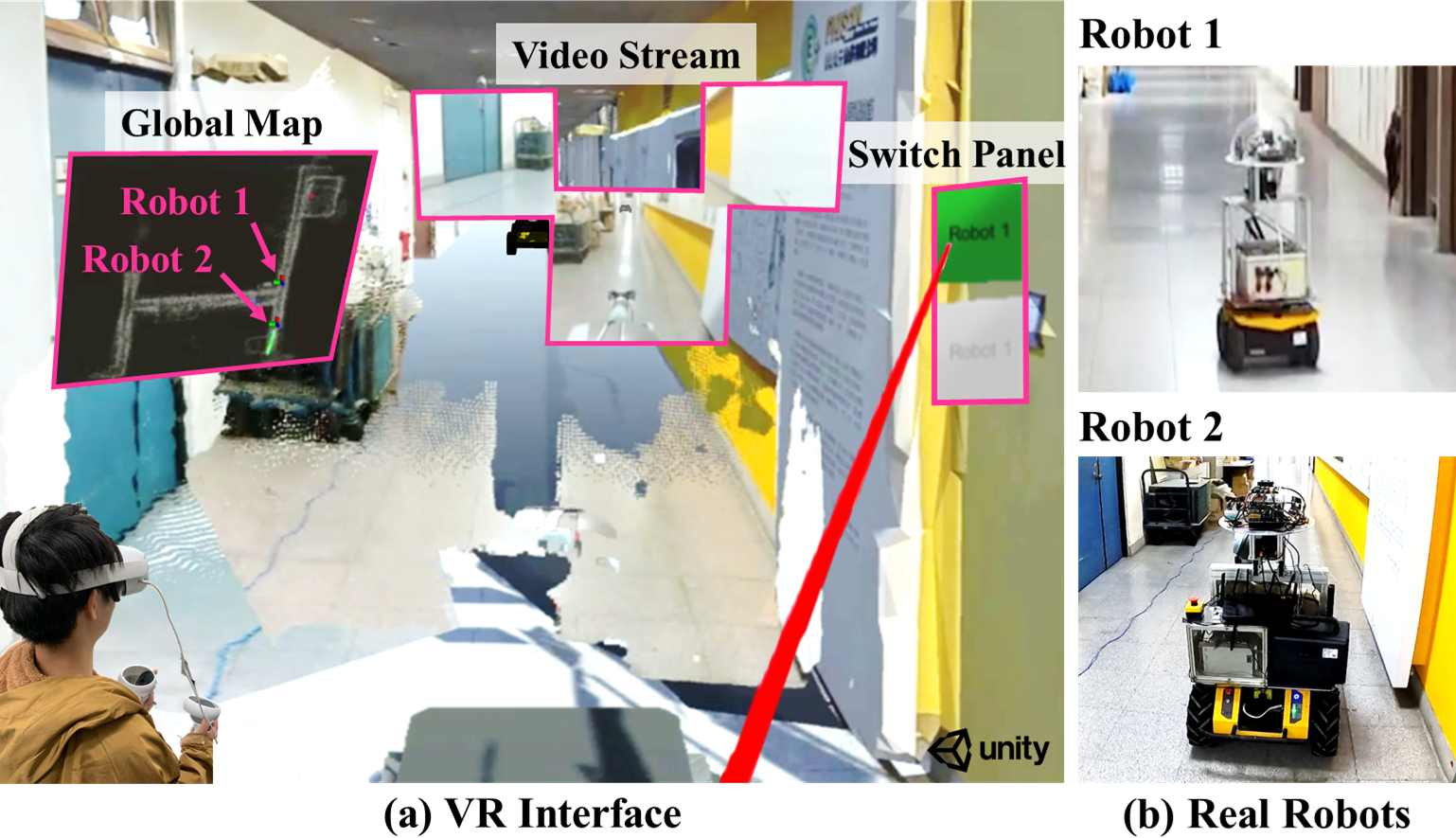

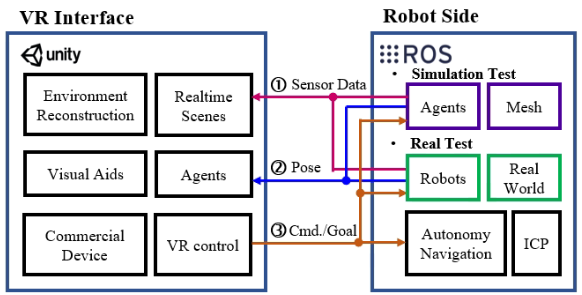

Through our integrated framework, we bolster human-robot collaboration using a VR interface, offering immersive, real-time experiences from any robot's first-person view. By harnessing rich spatial information and utilizing an intuitive control panel, users can efficiently reduce workload and craft strategic mission plans. The framework ensures smooth data exchange between the operator's VR platform (Unity) and the robot's operational environment (ROS).
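One detail that any Unity–ROS data exchange has to handle is the difference in coordinate conventions: ROS uses a right-handed frame (x forward, y left, z up), while Unity uses a left-handed frame (x right, y up, z forward). The sketch below illustrates the usual axis remapping for positions. It is written in Python for illustration only; the function names and the sample position are hypothetical, and it is not taken from the framework's own code.

```python
# Frame conventions:
#   ROS   (right-handed): x forward, y left, z up
#   Unity (left-handed):  x right,   y up,   z forward

def ros_to_unity(p):
    """Map a ROS position (x, y, z) onto Unity axes."""
    x, y, z = p
    return (-y, z, x)


def unity_to_ros(p):
    """Inverse mapping: a Unity position (x, y, z) back onto ROS axes."""
    x, y, z = p
    return (z, -x, y)


# Hypothetical robot position: 2 m ahead, 0.5 m to the left, 0.3 m up.
robot_in_ros = (2.0, 0.5, 0.3)
robot_in_unity = ros_to_unity(robot_in_ros)

print(robot_in_unity)                # (-0.5, 0.3, 2.0)
print(unity_to_ros(robot_in_unity))  # (2.0, 0.5, 0.3)
```

Applying the two functions in sequence returns the original point, which is a quick sanity check when wiring up a new message type.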

Heterogeneous Robot Team

Two robots were used for testing: a Jackal (left) with a 360° camera and a Husky (right) with a UR5 arm and three depth cameras. Two of these depth cameras covered a 3 m × 2 m forward area, and the third spanned the arm’s full 0.7-m stroke. The Husky also had a LiDAR for ICP positioning.

Husky, Clearpath Robotics, Inc., Canada

UR5, Universal Robots A/S, Denmark

Adaptability to Different Robots

Appendix A

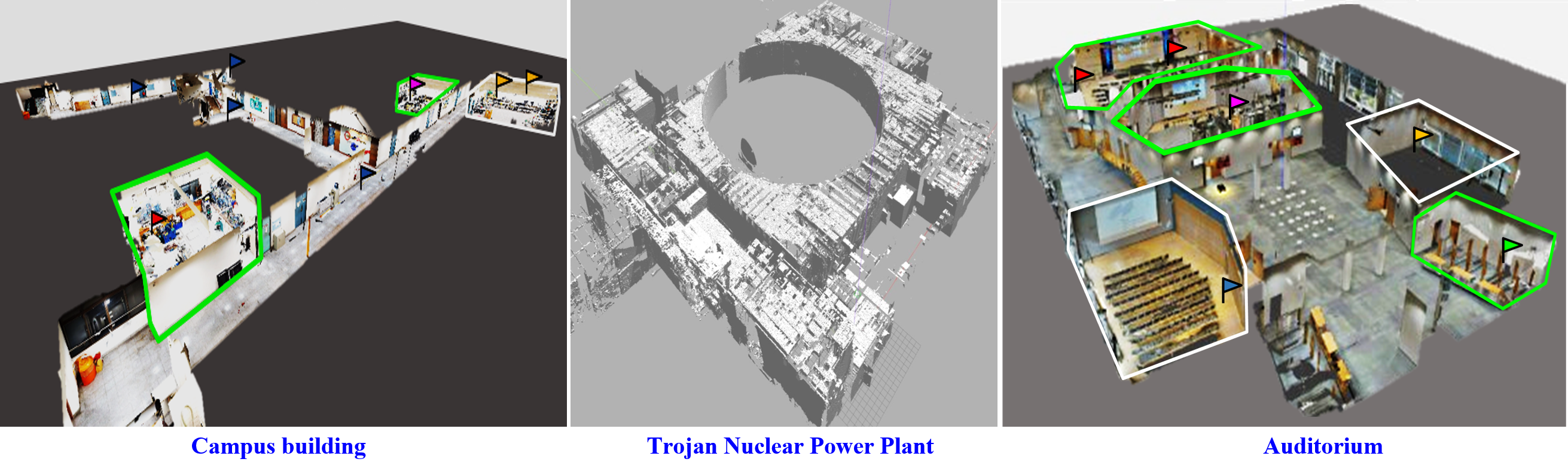

The 3D model of the environment was captured using a Matterport camera, a tripod-mounted rig equipped with three color cameras and three depth cameras oriented slightly up, horizontally, and slightly down. During each panorama capture, the rig rotates around the direction of gravity, stopping at six distinct orientations. At each stop, each of the three RGB cameras captures an HDR photo, while the three depth cameras acquire data continuously throughout the rotation. The collected depth data are then integrated to synthesize a 1280 × 1024 depth image aligned with each color image. Each panorama therefore yields 18 RGB-D images with nearly coincident centers of projection at the height of a human observer. In this study, a single operator recorded our 3D mesh model in approximately one and a half hours. Note that the Trojan Nuclear Power Plant reconstruction was provided by DARPA, and the auditorium is a sample model from Matterport, Inc.
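The count of 18 RGB-D images per panorama follows from six stops times three camera pairs. A minimal sketch of that enumeration is given below; the even yaw spacing is an assumption made for illustration (the text only states that there are six distinct orientations), and the names are hypothetical.

```python
# Capture layout described in the text: six stops per panorama,
# three RGB-D camera pairs at different tilts.
STOPS_PER_PANORAMA = 6
CAMERA_TILTS = ("slightly up", "horizontal", "slightly down")


def panorama_views(stops=STOPS_PER_PANORAMA, tilts=CAMERA_TILTS):
    """List (yaw_degrees, tilt) for every RGB-D image in one panorama."""
    # Assumption: stops are evenly spaced in yaw over a full turn.
    step = 360.0 / stops
    return [(i * step, tilt) for i in range(stops) for tilt in tilts]


views = panorama_views()
print(len(views))  # 18
```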

Auditorium (conference center in Munich, Germany) from Matterport, Inc.

Campus Building at NYCU in Taiwan

Free to download Campus Building at NYCU in Taiwan

Free to download all models and the related Gazebo world

Matterport Pro2 3D Camera, Matterport, Inc., California, U.S.

Appendix B

Tutorial: how to set up the environment for teleoperation.

ROS

Unity

Appendix C

Repository for reconstructing point clouds from RGB and depth images on the Unity platform.

Point-cloud streaming (PCS)
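As a rough illustration of what reconstructing a point cloud from RGB and depth images involves, the sketch below back-projects each depth pixel into 3D with a standard pinhole camera model and attaches the matching color. It is written in plain Python rather than Unity code and is not the repository's implementation; the intrinsics (`fx`, `fy`, `cx`, `cy`), the function name, and the tiny sample frame are all assumptions made for the example.

```python
def depth_to_points(depth, colors, fx, fy, cx, cy):
    """Back-project a depth image into colored 3D points (camera frame).

    depth  : rows of depth values in meters (0 means no measurement)
    colors : rows of (r, g, b) tuples aligned pixel-for-pixel with depth
    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, Z = depth
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0.0:
                continue  # skip pixels without a valid depth reading
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append(((x, y, z), colors[v][u]))
    return points


# Hypothetical 2 x 2 frame; one pixel has no depth and is dropped.
depth = [[1.0, 2.0],
         [0.0, 4.0]]
colors = [[(255, 0, 0), (0, 255, 0)],
          [(0, 0, 255), (255, 255, 255)]]

cloud = depth_to_points(depth, colors, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
for xyz, rgb in cloud:
    print(xyz, rgb)
```

In a streaming setting, the same per-pixel computation would typically run on the GPU for each incoming frame, with the intrinsics taken from the camera's calibration.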