Ching-I Huang, Sun-Fu Chou, Li-Wei Liou, Quang TN Vo, Nathan, Yu-Ting Ko, Lap-Fai Yu, and Hsueh-Cheng Wang

Abstract

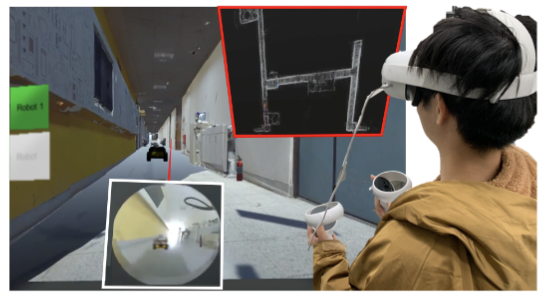

In recent decades, robots have become capable of assisting with or carrying out work that humans cannot perform in hazardous areas; such work frequently aligns with the search-and-rescue missions of the Defense Advanced Research Projects Agency (DARPA) Subterranean (SubT) Challenge. Although many autonomy techniques have been developed and demonstrated in the competition, some complex situations and operations still require decisions from experienced humans, and robots are not allowed to execute them without supervision. How to effectively test and support the development of heterogeneous multi-robot teams that require human interaction remains an open question. We propose a simulation framework in which a human supervisor, stationed at the base, uses a commercial virtual reality (VR) interface. The robot team, located remotely, consists of two unmanned ground vehicles (one is twice as fast as the other, which is equipped with a robot arm) and performs search and rescue in a navigation among movable obstacles (NAMO) scenario, where the navigation path may be blocked by movable obstacles that require a manipulator to push them away. We carried out a behavioral study with 24 users, who were tested in virtual environments under the proposed Unity and Gazebo evaluation framework. We show that the proposed framework is cost-effective and well suited to studying human interactions, including 1) the efficiency of single-user control of multiple heterogeneous robots compared with that of multi-user control of multiple heterogeneous robots, and 2) collaborative navigation by users and multiple heterogeneous robots with autonomous algorithms (navigation-only vs. the capability to push away obstacles), which affects the user's strategy.